It has been a while since I’ve posted here, and it has been a period of great turmoil in my life. Now that things have settled a bit, I can start to gradually bring together all the ideas I’ve gathered in the past month. All in all, I am in a cheerful mood and, now that spring (mm..ok, maybe summer?) has finally arrived, I am eager to write a quick article on playful ways to deal with satellite data.

Earth Observation (EO) is still a mystery subject for the majority of people. Everybody has that aunt who is greatly impressed when you talk about the way you study the Earth using images captured by a satellite up in the sky, right? Yet, we all use it very often: just look at the satellite basemap in Google Maps or Google Earth. Yes, your friends might be fascinated by this and consider it highly technical, suited only for engineers or people with a good scientific background. No, satellite imagery use is no longer restricted to a handful of people. Thanks to some innovative startups, new applications on the web allow everyone to get their hands on EO data and quickly create something interesting to visualize.

I love the idea of satellite data getting more popular among non-scientists and I firmly believe that this is something worth sharing. I had this idea a couple of weeks ago, when I first came across a tweet advertising a new mobile application called SnapPlanet. The app allows users to quickly create snapshots or animated GIFs using Sentinel-2 imagery. You can zoom in to any place on Earth (by searching, using the random button or pinpointing your location), choose your level of detail and control the bounding box shape and size. The next step is dedicated to the kind of snap you want: animated or static. Depending on the type, you’ll have custom features. For example, images have an option that allows users to pick a band combination (at first the exact ratio was not specified; *the ratio is now specified and there is also a small description of the uses of each ratio*) and GIFs can have different levels of illumination and speed (*L.E.: and also band combinations*). The neat thing is that, for both types, the first image to appear is usually the one with the “best” atmospheric conditions, but don’t worry, you can browse the whole archive for that specific location, using the timeline and the suggestive weather condition icons. GIFs were initially limited to 8 selected pictures. *With their latest build and application update, GIFs are no longer limited to 8 selected pictures.* When you’re done, a friendly bot will let you know when your GIF/image is processed and ready for sharing.

The great thing is that the images appear to have a certain enhancement or some sort of basic atmospheric correction. Also, the service is quick to deliver, glitch free and available for iOS and Android devices, and the developers accept suggestions for future releases. You can share your results on all common social media channels and you’ll also have a personal profile to keep track of all your creations and those of others. There is also a search section that works fine, with a lot of options to find interesting imagery and to see and appreciate what other users did. The only problem… the app is new and seems to be popular only among remote sensing enthusiasts, but maybe it will be more populated soon.

Edit: While doing the snapshots for this post, I found out that they will also include an interesting new bathymetry filter.

Later edit: Right after publishing this article, I upgraded to the newest version of SnapPlanet, which has a lot of new options. Some of them are highlighted in the article in italic as a correction. Others, such as the addition of new filters (a whole plethora of them!!!) and some new features for GIFs, are also useful and nice. Not only a new bathymetry filter, but also a lot of new band combinations for geology, vegetation and urban areas have been added. And the remarkable thing is that all of these were based on users’ suggestions and work seamlessly. I think Jerome does a great job!

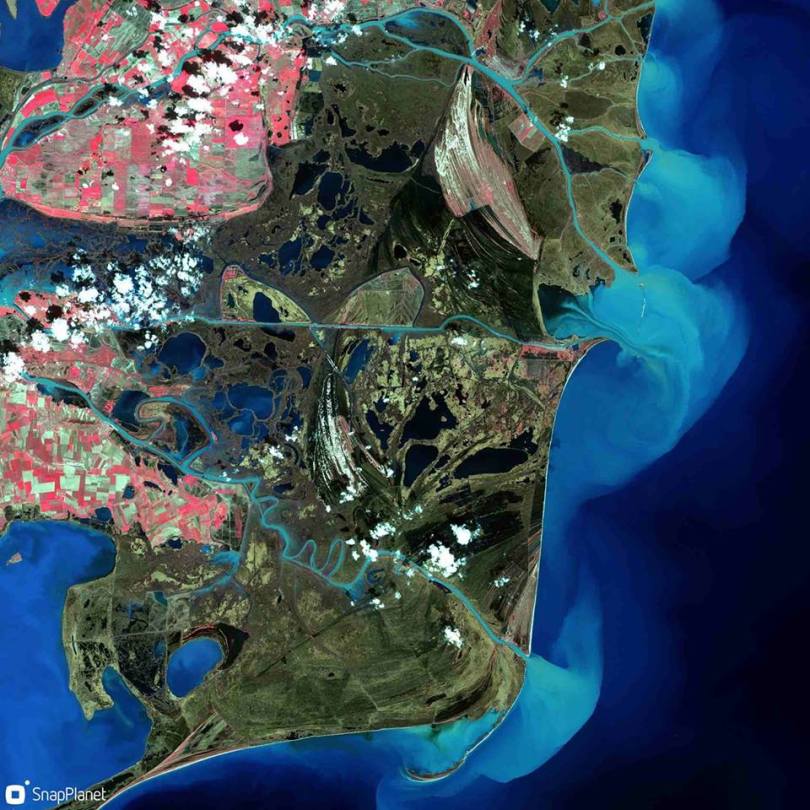

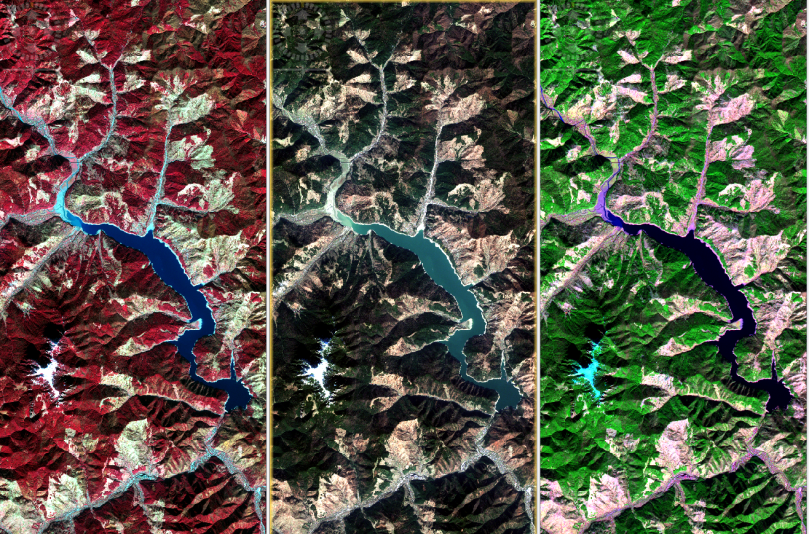

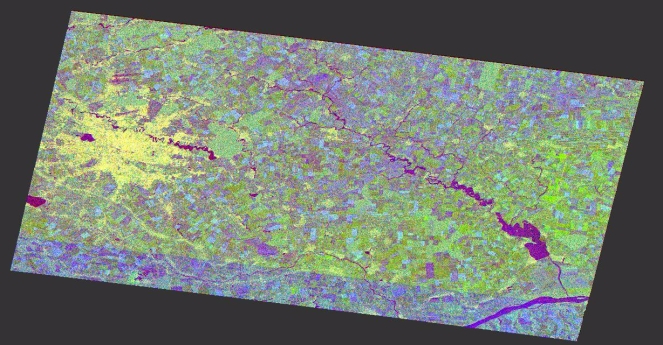

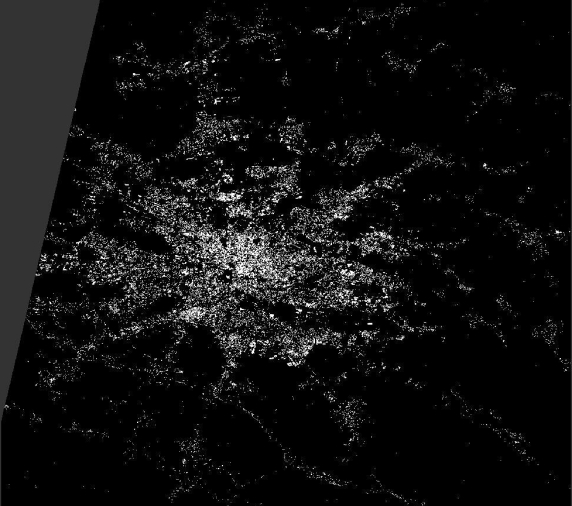

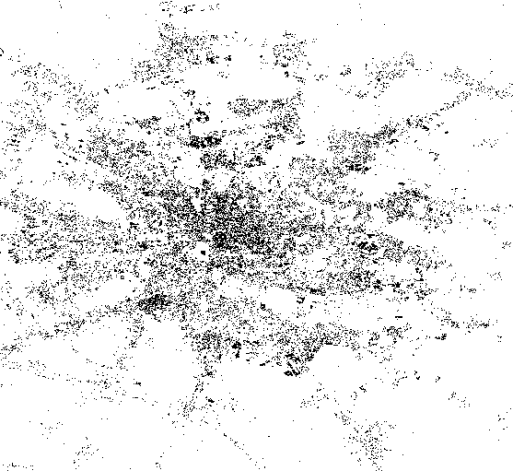

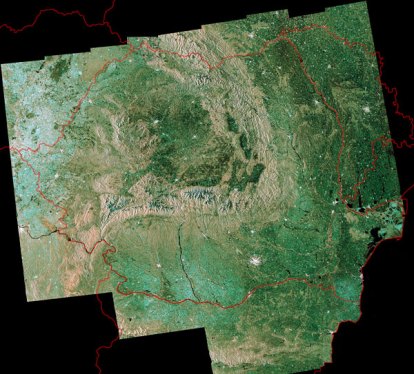

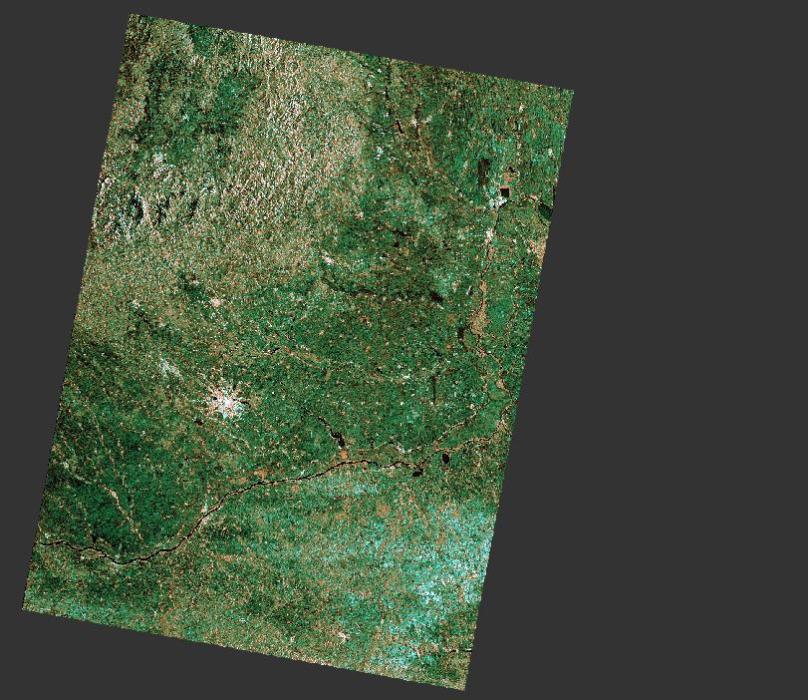

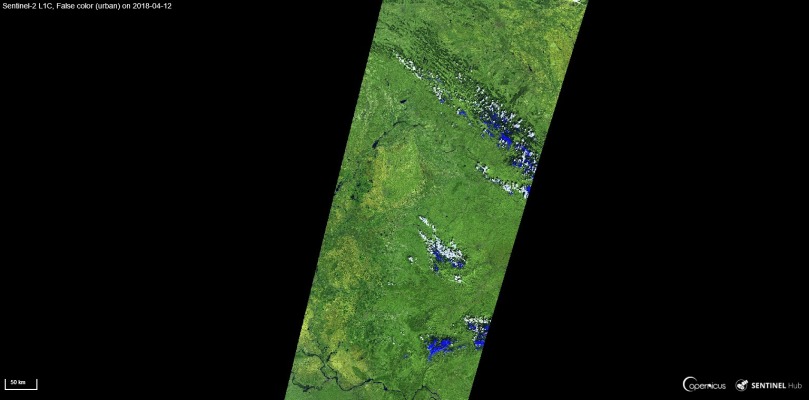

From my point of view, this is a great way to make satellite imagery easy to use even for those who are not proficient with this kind of data and there are a lot of interesting things to discover interactively. For example, a GIF can be useful in spotting changes in land use between seasons or years and band combinations can also reveal a lot about the unseen features or behavior in our environment. Take this picture that uses the near infrared band as a replacement for the red channel and you can spot the flooded areas, the cultivated land and the sediment flow in the Black Sea, in the Danube Delta. And you don’t have to be a scientist to do it!

Level of difficulty: 1 out of 3

If you liked this picture, a more advanced web map of changes in the Danube Delta can be found here. It is curated by my master’s thesis supervisor, Stefan Constantinescu, and features some satellite imagery and data for monitoring the Danube Delta environment.

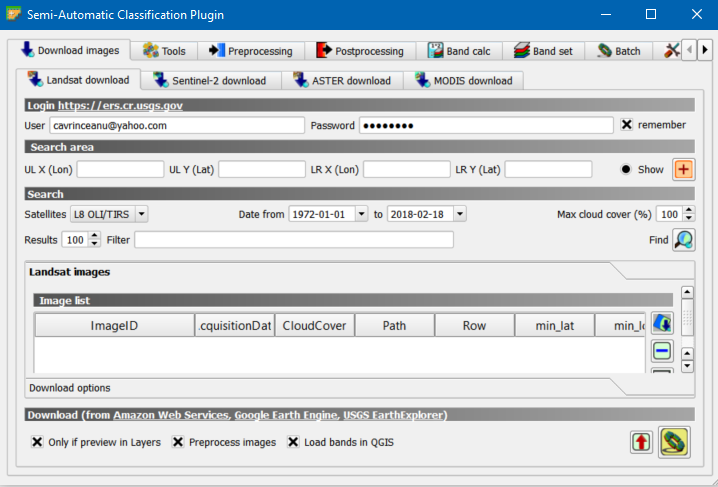

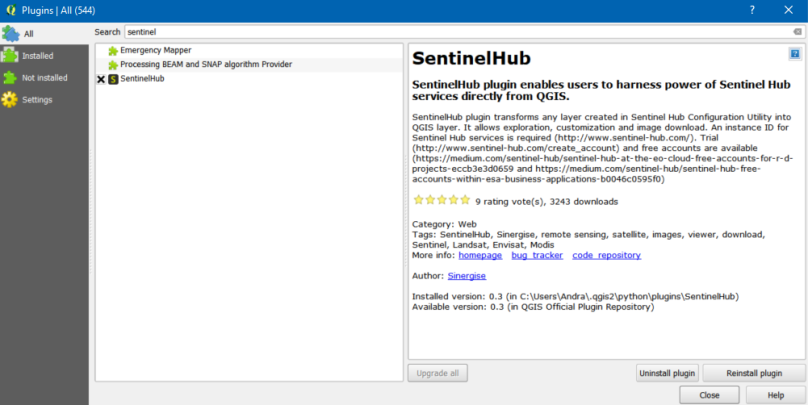

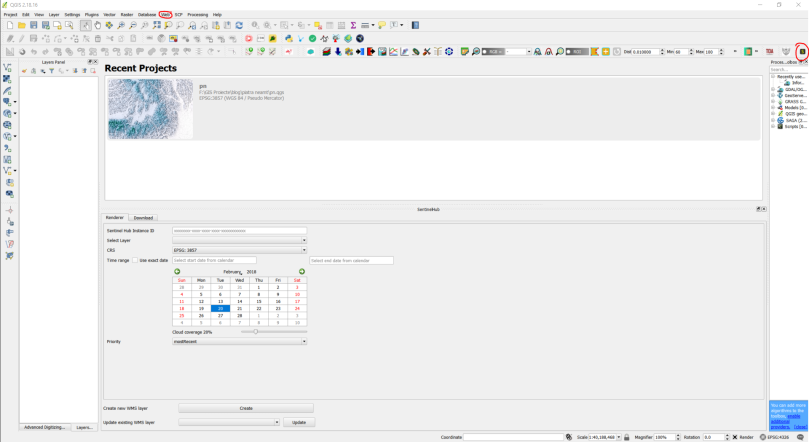

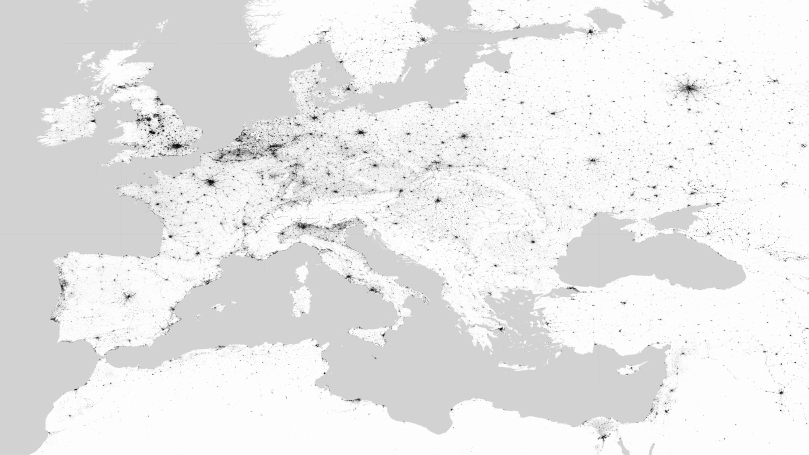

My second option is a bit more advanced and it is called Sentinel Playground, developed by Sentinel Hub. Here, you can play with full resolution, up to date data from Sentinel-2, Landsat, MODIS or even a Digital Elevation Model (DEM). It opens up on your default location, but you can search for any place on Earth. It has a plethora of possible band combinations to choose from and one nice thing is that they are also explained. Much to my delight, there is a geology band combo. DEMs can be delivered in four palette options: greyscale, colorized, sepia or custom.

For those who are not so familiar with band combinations, ratios or index calculations, a simple explanation can be found by googling the principles of optical remote sensing. Long story short, when a satellite passes over a certain place, it takes a group of greyscale pictures (a scene), each representing the way that the satellite sensor captures the reflected light of a given wavelength. For example, plants absorb most of the red and blue light, but reflect more in the green part of the visible spectrum (and reflect even more strongly in the near infrared, which our eyes cannot see). This is the reason why we see them in shades of green and why they appear brighter on the green band snapshot than on the red or blue ones. When taken separately, a scene’s pictures, each taken at a different wavelength, usually tell us less than a natural color composite (RGB, a combination of the red, green and blue bands) or a false color composite (any combination of bands replacing any of the channels in the traditional red, green, blue combination). For example, near infrared as red, red as green and green as blue will give us a better idea about crops and is suitable for agriculture studies. Band ratios are mathematical operations done between different bands, and indexes are combinations of such mathematical operations. They are used in more complex analyses. For more information on Sentinel-2’s bands, here’s a wiki. For Landsat 8 (L8) or MODIS sensors, more information can be found by browsing through the links above. For a quick remote sensing lesson, give this a go.
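To make the band combination and index ideas a bit more concrete, here is a minimal Python sketch. It is only an illustration under assumptions: it uses NumPy, the tiny 2x2 "bands" are made-up reflectance values (not real Sentinel-2 data), and the function names `false_color` and `ndvi` are hypothetical. NDVI (Normalized Difference Vegetation Index) is one widely used index, picked here as an example; it is not necessarily what any of the apps in this article compute.

```python
import numpy as np

# Made-up reflectance values (0..1) for a 2x2 pixel scene; NOT real data.
# Top row: two vegetation pixels. Bottom row: water, then bare soil.
green = np.array([[0.08, 0.10], [0.06, 0.20]])
red = np.array([[0.04, 0.05], [0.04, 0.25]])
nir = np.array([[0.45, 0.50], [0.02, 0.30]])


def false_color(nir_band, red_band, green_band):
    """Build a false color image: NIR shown as red, red as green, green as blue."""
    return np.dstack([nir_band, red_band, green_band])


def ndvi(nir_band, red_band):
    """NDVI = (NIR - red) / (NIR + red); values fall between -1 and 1."""
    total = nir_band + red_band
    # Replace zero sums with 1 to avoid dividing by zero, then force those pixels to 0.
    safe_total = np.where(total == 0, 1.0, total)
    return np.where(total == 0, 0.0, (nir_band - red_band) / safe_total)


composite = false_color(nir, red, green)  # shape: (rows, cols, 3)
index = ndvi(nir, red)                    # shape: (rows, cols)
print(composite.shape)
print(np.round(index, 2))
```

In this toy scene the vegetation pixels get high index values, the water pixel goes negative and the bare soil stays close to zero, which is the kind of contrast that makes such indexes handy for a quick look at crops.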

But the neat things don’t stop here. There is a dedicated Effects panel, where you can choose from options such as Atmospheric Correction, Gain or Gamma, enhancing your image as you wish. There are other features as well: you can search by date in a calendar pane and adjust your desired cloud cover level, so you can pick the best scene for you. Also, switching between different types of sensors can be done using the satellite button.
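If you are curious what sliders like Gain and Gamma roughly do to the pixel values, here is a small Python sketch. It is an assumption on my part: it shows one common textbook formulation (multiply by the gain, clip, then raise to the power 1/gamma), and I do not know whether Sentinel Playground uses exactly this formula. The function name `apply_gain_gamma` and the sample values are hypothetical.

```python
import numpy as np


def apply_gain_gamma(band, gain=1.0, gamma=1.0):
    """Multiply by gain, clip to 0..1, then apply gamma as an exponent of 1/gamma.

    Assumed convention: gamma > 1 brightens dark tones. Other tools may define
    gamma the other way around.
    """
    scaled = np.clip(band * gain, 0.0, 1.0)
    return scaled ** (1.0 / gamma)


# Made-up reflectance values for a rather dark image strip.
dark = np.array([0.0, 0.04, 0.16, 0.5])

brighter = apply_gain_gamma(dark, gain=2.0)   # linear stretch, bright end saturates
lifted = apply_gain_gamma(dark, gamma=2.0)    # square root: lifts shadows the most
print(brighter)
print(lifted)
```

The difference between the two is worth noticing: gain stretches everything by the same factor and can blow out bright pixels, while gamma mostly lifts the dark tones and leaves the brightest ones in place.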

When you’re happy with all the adjustments, click Generate. A new window will open with a full resolution preview of your image. You can download it, copy the link or share it through your social media accounts. Or embed it using the code snippet provided with the key button, or integrate it in a web application in the same way, or…

I agree this is a bit advanced for common users, but it is still a great tool and does not require much knowledge of how satellites work or how imagery is processed. Its main purpose is also more that of a quick tool for creating basemaps to brag about on the internet, spotting changes, making a rapid, rough, visual analysis using the spectral information or generating an embed code in a quick manner.

Level of difficulty: 2 out of 3

Sinergise’s Sentinel Hub has other interesting products. EO Browser, for example, is a more complex one, suited more for scientists and less for the general public. But this doesn’t mean that you can’t obtain a nice result with a minimum of effort and knowledge. Let me show you how!

The post that led me to this solution is this one. The people at Sentinel Hub use Medium a lot for sharing stories about their products, use cases, updates and new features. They also explain how they do all the processing with minimal resources and why they do it. If you are passionate about remote sensing, this is worth a follow and a read.

First of all, you’ll need an account. You can create one following the instructions detailed here. Even though the Configuration Utility Platform rights will be revoked after 1 month, you can still use the credentials for logging in to EO Browser.

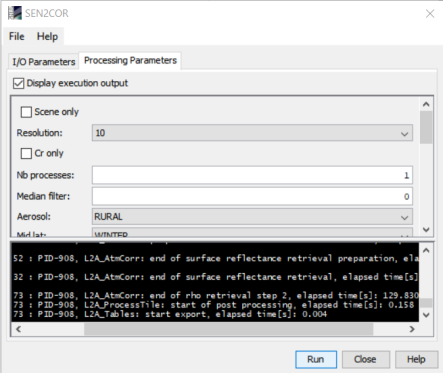

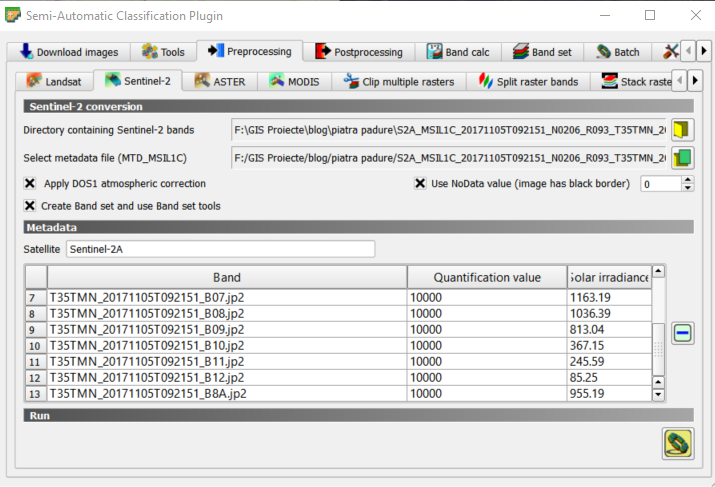

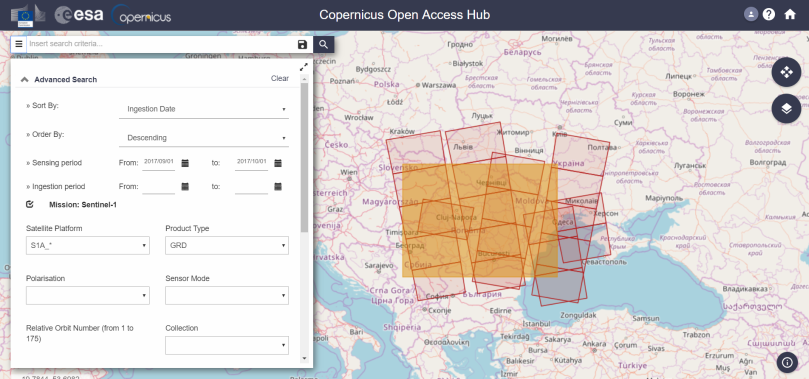

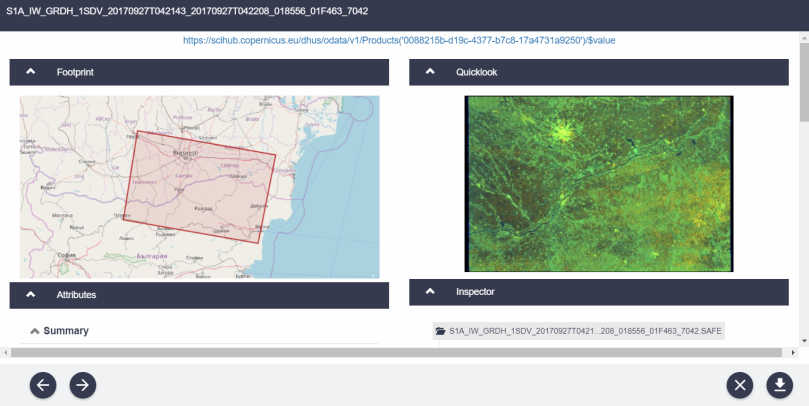

Second, go to the search bar and look for the place you desire, or zoom and pan on the map until you get there. Additionally, you can upload a KML/KMZ file or draw an AOI (area of interest). Next, you’ll need to choose one product from the panel list. For this article’s purpose, I’ll keep using Sentinel-2, but feel free to check the other product options as well; the list is quite comprehensive. Because it is faster and generates previews in a simpler manner, I’ll go for the L1C product. I will also set my cloud cover to the desired percentage (10% for me) and a time range that is large enough (maybe from the 1st of January?). Hit search, and you’ll be redirected to Results.
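Conceptually, the search step is just a filter over scene metadata: keep what falls inside the time range and below the cloud cover threshold. Here is a minimal Python sketch of that idea. Everything in it is hypothetical: the `Scene` class, the `search` function, the scene ids, dates and cloud percentages are invented for illustration and have nothing to do with how EO Browser is actually implemented.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Scene:
    scene_id: str       # invented identifier
    acquired: date      # acquisition date
    cloud_cover: float  # percent of the scene covered by clouds


def search(scenes, start, end, max_cloud):
    """Keep scenes inside [start, end] with cloud cover <= max_cloud, newest first."""
    hits = [
        s for s in scenes
        if start <= s.acquired <= end and s.cloud_cover <= max_cloud
    ]
    return sorted(hits, key=lambda s: s.acquired, reverse=True)


# Invented catalog entries, just to exercise the filter.
catalog = [
    Scene("scene-a", date(2018, 1, 14), 4.2),
    Scene("scene-b", date(2018, 2, 3), 37.0),   # too cloudy
    Scene("scene-c", date(2018, 3, 20), 9.8),
    Scene("scene-d", date(2017, 12, 30), 1.0),  # before the time range
]

results = search(catalog, date(2018, 1, 1), date(2018, 5, 31), max_cloud=10.0)
for scene in results:
    print(scene.scene_id, scene.acquired, scene.cloud_cover)
```

With a 10% threshold and a range starting on the 1st of January, only the two clear scenes from inside the range survive, listed from the most recent to the oldest.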

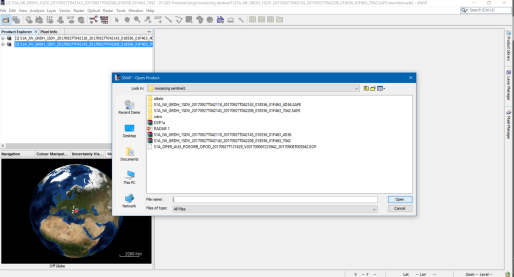

Choose whatever scene suits your taste by reviewing the attached information. This will take you to the visualization step. Here, things are similar to the previous example (Sentinel Playground). You can choose a band combination from the list (although they are not explained) and, after processing (it takes seconds, but it depends on your connection), download the result.

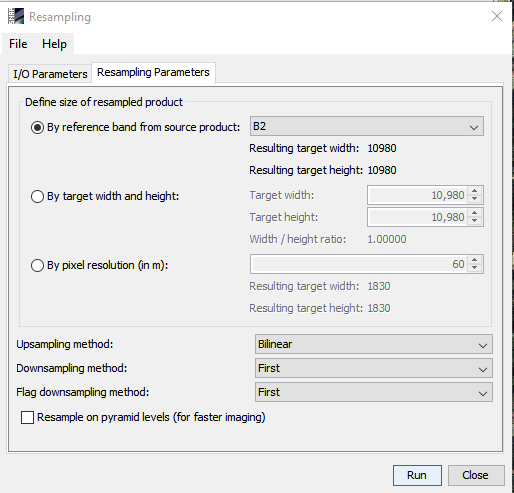

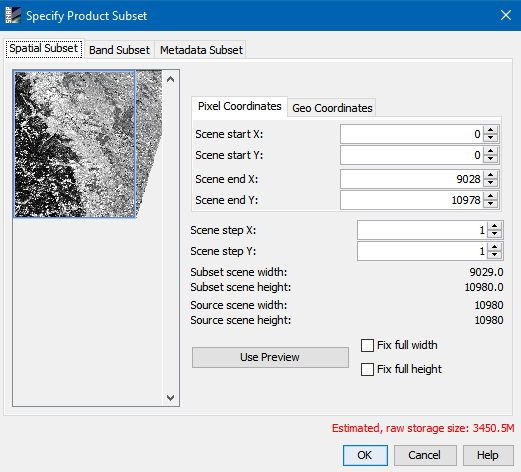

If you feel more confident and have some EO skills, you can also enable analytical mode, where you can customize the download and choose between a greater number of options: image type, resolution, projection and bands. Depending on what you’ve selected, the process will take longer. I tried the following settings: TIFF 16 bit, High res, Web Mercator, B2, B3, B4 (blue, green, red). It gave me an 11 MB archive whose content is mostly useful for the same rough, visual analysis, as it does not have any data attached. So far, everything is similar to the Sentinel Playground.

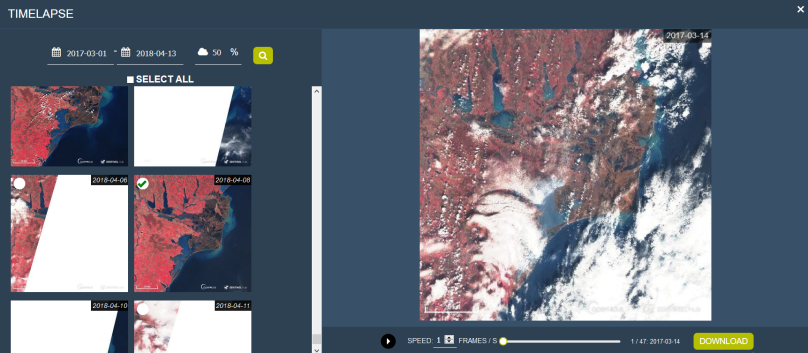

One reason why I chose to feature EO Browser is its latest update, which allows users to create GIFs. GIFs are nice and eye-catching, and can also reveal a lot of interesting features, especially when we think about changing elements. In EO Browser, the neighboring button helps us create such an animation. Select the dates and the cloud cover percentage and browse through the results. I’ve done my demo on the Danube Delta, and kept only those scenes depicting the entire area.

Adjust for the speed and voila!

What I like best in Sentinel Hub’s GIF is the fact that you can choose more than 8 frames. I don’t particularly fancy the logo stamps, but credit must be specified. It was quick, easy to use and spectacular in result. You can now share your newly created image or GIF with the rest of the world. :)

Level of difficulty: 3 out of 3 (only because it is more complex and has more sensors and features)

In conclusion, satellite data is not scary and everyone can create something meaningful and interesting, or even perform a rough analysis within minutes. I absolutely love these simple ways of advertising the beauty of our Earth and making us aware of the changes that happen around us every single day. EO data is beautiful and has the power to move people when displayed in an exciting, comprehensible and accessible fashion. Using these web and mobile apps we can play, learn and share information about our planet with only a couple of clicks, and this is thanks to today’s technological development, innovative missions, people and businesses. Space is fascinating and data is frightening for the majority of people, but combining them shouldn’t necessarily be hard. It is easier for us to communicate science and raise awareness of our habitat by making it accessible to non-traditional users. These apps can become fantastic learning and teaching tools, creative ways to generate content and reasons to discover the world. The point is to become more knowledgeable and conscious about the surrounding environment, about Earth and science and about the future.

Cheers,

Cristina

P.S.: I am sure there are many more such tools. I would love to hear your suggestions and stories on how you came across them, how you used them, what results you got, how you and other people reacted when visualizing them, what was easy or hard in the process and what you’ve learned. Feel free to drop a comment below and help me with some valuable info from your experience.